Understanding NLP Fundamentals for Chatbots

Before diving into the technical aspects of chatbot training, it’s essential to grasp the core NLP concepts that power modern conversational AI. These fundamentals form the foundation upon which truly helpful and responsive chatbots are built.

Key NLP Components for Chatbots

A well-designed NLP chatbot relies on several critical components working in harmony:

- Intent recognition – Identifying what the user is trying to accomplish (e.g., booking a meeting, requesting information, reporting an issue)

- Entity extraction – Pulling specific pieces of information from user inputs (names, dates, locations, product types)

- Context management – Maintaining conversation history to provide contextually relevant responses

- Sentiment analysis – Determining user emotions to adapt responses accordingly

- Language understanding – Comprehending the meaning behind user messages despite variations in wording

Each of these elements requires specific training approaches and data, working together to create a cohesive conversational experience.

Advanced AI platforms like Gibion can help streamline the implementation of these components into your chatbot architecture.

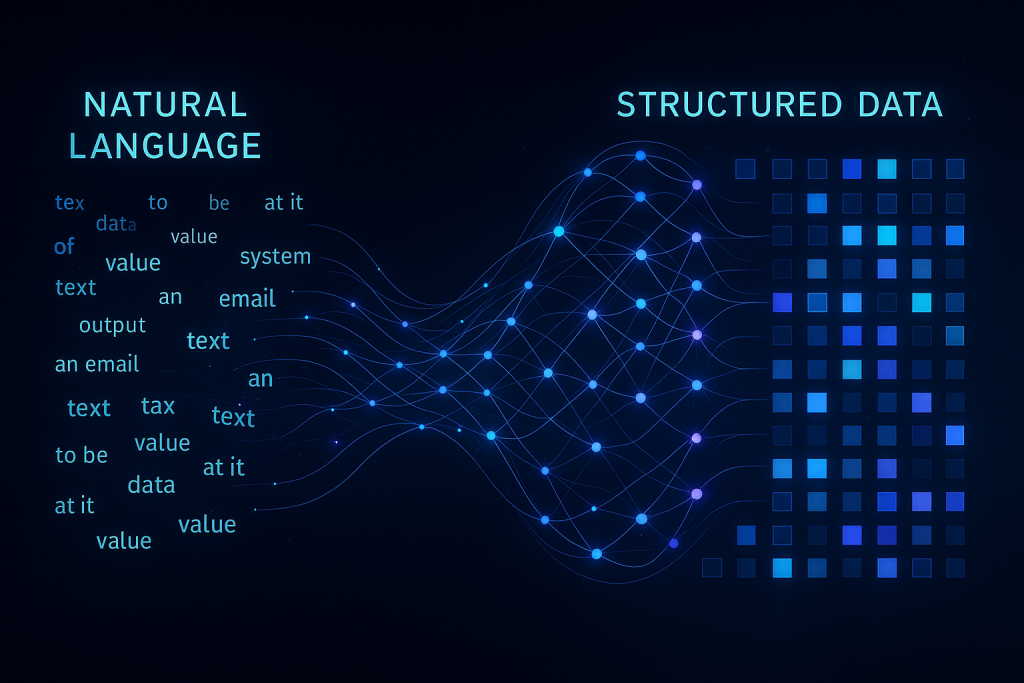

How NLP Transforms Text into Actionable Data

The magic of NLP happens when raw text is processed through several linguistic layers:

| Processing Layer |

Function |

Example |

| Tokenization |

Breaking text into words or subwords |

“I need to reschedule” → [“I”, “need”, “to”, “reschedule”] |

| Part-of-speech tagging |

Identifying grammatical elements |

“Book a meeting” → [Verb, Article, Noun] |

| Dependency parsing |

Establishing relationships between words |

Determining “tomorrow” modifies “meeting” in “schedule a meeting tomorrow” |

| Named entity recognition |

Identifying specific entity types |

Recognizing “May 21st” as a date and “Conference Room A” as a location |

| Semantic analysis |

Understanding meaning and intent |

Recognizing “Can you move my 2pm?” as a rescheduling request |

This linguistic processing pipeline transforms unstructured text inputs into structured data that chatbots can act upon, making the difference between a bot that merely responds and one that truly understands.

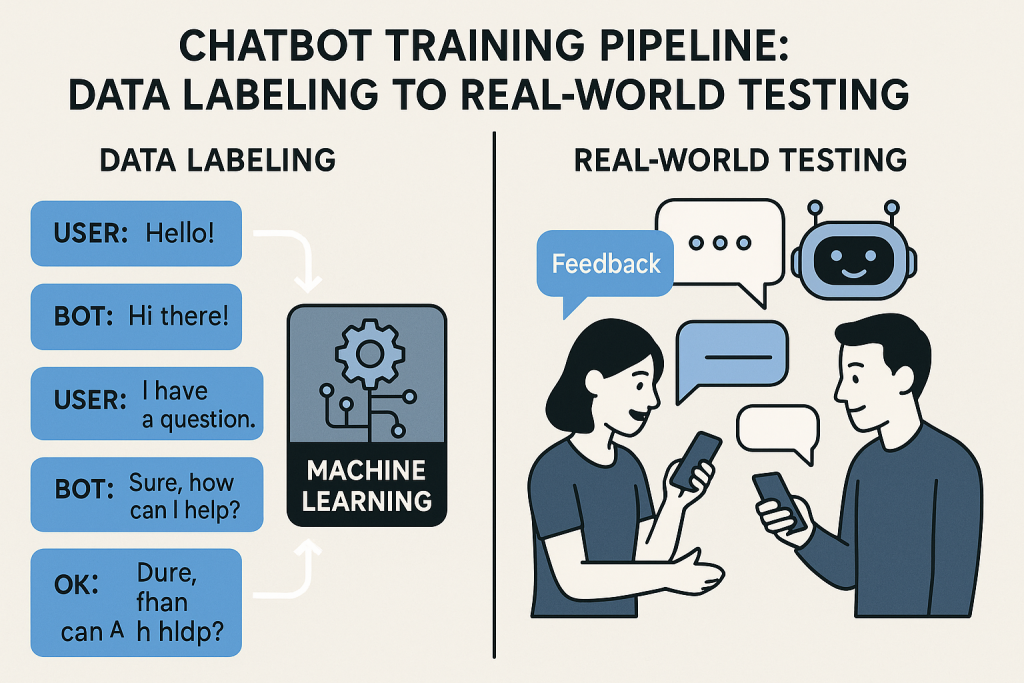

Data Collection and Preparation for Training

The quality of your training data directly impacts your chatbot’s performance. This crucial foundation determines whether your bot will understand users or leave them frustrated.

Creating a Diverse Training Dataset

An effective NLP chatbot needs exposure to the wide variety of ways users might express the same intent. Here’s how to build a comprehensive dataset:

- User query collection methods

- Analyze customer support logs and chat transcripts

- Conduct user interviews and focus groups

- Implement beta testing with real users

- Review industry-specific forums and social media

- Conversation flow mapping – Chart typical conversation paths users might take

- Query variation techniques – Generate alternative phrasings for each intent

- Domain-specific terminology – Include industry jargon and specialized vocabulary

- Data annotation best practices – Label data consistently with clear guidelines

Remember, your chatbot will only be as good as the variety of examples it’s exposed to during training. A diverse dataset helps ensure your bot can handle the unpredictability of real-world conversations.

Data Cleaning and Preprocessing Techniques

Raw conversational data is messy. Here’s how to refine it for optimal training results:

- Text normalization – Converting all text to lowercase, handling punctuation consistently

- Handling misspellings – Incorporating common typos and autocorrect errors

- Removing noise – Filtering out irrelevant information and filler words

- Dealing with slang and abbreviations – Including conversational shortcuts like “omg” or “asap”

- Data augmentation – Creating additional valid training examples through controlled variations

This cleaning process transforms raw, inconsistent data into a structured format your model can effectively learn from.

Using pre-defined templates can help streamline this process, especially for common use cases.

Choosing the Right NLP Model Architecture

Not all NLP models are created equal, and selecting the right architecture for your specific needs is crucial for chatbot success.

Rule-Based vs. Machine Learning Approaches

There are several distinct approaches to powering your chatbot’s understanding:

| Approach |

Strengths |

Limitations |

Best For |

| Rule-Based |

Predictable behavior, easier to debug, works with limited data |

Rigid, can’t handle unexpected inputs, maintenance-heavy |

Simple use cases with limited scope, highly regulated industries |

| Statistical ML |

Better generalization, handles variations, improved with more data |

Requires substantial training data, occasional unexpected behavior |

Medium-complexity use cases with moderate data availability |

| Hybrid |

Combines predictability with flexibility, fallback mechanisms |

More complex to implement, needs careful integration |

Complex domains with some critical paths that require certainty |

Many successful implementations start with a hybrid approach, using rules for critical functions while leveraging machine learning for general conversation handling.

Deep Learning Models for Advanced Understanding

For sophisticated chatbot applications, deep learning models offer unprecedented language understanding capabilities:

- Transformer architectures – The foundation of modern NLP, enabling attention to different parts of input text

- BERT and GPT implementations – Pre-trained models that capture deep linguistic knowledge

- Fine-tuning pre-trained models – Adapting existing models to your specific domain

- Custom model development – Building specialized architectures for unique requirements

- Resource requirements – Balancing model complexity with available computing resources

While larger models like GPT can deliver impressive results, they often require significant resources. For many business applications, smaller fine-tuned models provide the best balance of performance and efficiency.