Event-Driven Workflows: Building Reactive Automation Systems

In today’s fast-paced digital landscape, businesses need automation systems that can respond instantly to changing conditions. Traditional sequential workflows—where processes follow predetermined steps from start to finish—simply can’t keep up with the dynamic nature of modern business operations. This is where event-driven AI workflows shine, offering a paradigm shift in how we approach automation by enabling systems to react intelligently to real-time triggers.

Whether you’re looking to streamline operations, enhance customer experiences, or gain competitive advantages through faster response times, event-driven workflows powered by AI offer transformative potential. In this comprehensive guide, we’ll explore how these reactive automation systems work, their applications, and implementation strategies to help your organization harness their full potential.

Understanding Event-Driven Workflows

Before diving into implementation details, it’s essential to understand what makes event-driven workflows fundamentally different from traditional approaches and why they’re increasingly becoming the backbone of modern automation systems.

From Sequential to Reactive: A Paradigm Shift

Traditional workflows operate in a sequential fashion—following predefined steps in a specific order. While predictable, these workflows struggle with flexibility and real-time responsiveness. Event-driven workflows represent a complete paradigm shift in how we think about process automation.

In an event-driven system, events serve as the primary drivers of action. An event is simply a significant change in state or an occurrence that the system recognizes as important. These can range from user actions (clicking a button) to system alerts (server load exceeding thresholds) to business events (inventory dropping below minimum levels).

The core principles that define event-driven thinking include:

- Decoupling: Components only need to know about the events they care about, not about other components

- Asynchronous processing: Events are processed independently of their sources

- Real-time responsiveness: Systems react as soon as events arrive, rather than waiting for a scheduled batch or polling cycle

- Scalability: Event processors can scale independently based on demand

The shift to event-driven workflows offers several compelling benefits:

| Benefit | Description |
|---|---|
| Increased agility | Systems can adapt quickly to changing conditions without requiring redesign |
| Better scalability | Components can be scaled independently based on event volume |
| Improved resilience | Failures in one component don’t necessarily affect others |
| Enhanced responsiveness | Actions trigger immediately when relevant events occur |

Anatomy of an Event-Driven Workflow

To understand how event-driven systems function, we need to examine their key components:

Event producers are the sources that generate events. These can be applications, services, IoT devices, user interactions, or system monitors. For example, an e-commerce platform might produce events when customers add items to their cart, place orders, or abandon checkout.

Event consumers are the components that react to events. They subscribe to specific event types and execute predefined actions when those events occur. In our e-commerce example, a notification service might consume “order placed” events to send confirmation emails.

Event channels (sometimes called buses or brokers) serve as the communication infrastructure between producers and consumers. These middleware systems handle event routing and delivery, and often provide features such as persistence and replay. Popular examples include Apache Kafka, RabbitMQ, and Amazon EventBridge.
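
To make the three roles concrete, here is a minimal in-memory sketch of a producer, a channel, and a consumer. It is an illustration only: the `EventBus` class, the `order.placed` event name, and the `send_confirmation` handler are hypothetical, and a real broker would add persistence, retries, and asynchronous delivery.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Any, Callable, DefaultDict, Dict, List


@dataclass(frozen=True)
class Event:
    type: str  # hypothetical naming scheme, e.g. "order.placed"
    payload: Dict[str, Any] = field(default_factory=dict)


Handler = Callable[[Event], None]


class EventBus:
    """In-memory stand-in for an event channel (synchronous, no persistence)."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        # Consumers register interest in an event type, not in a producer.
        self._handlers[event_type].append(handler)

    def publish(self, event: Event) -> None:
        # Producers publish without knowing who, if anyone, is listening.
        for handler in list(self._handlers.get(event.type, [])):
            handler(event)


sent_emails: List[str] = []


def send_confirmation(event: Event) -> None:
    # Consumer: stands in for a notification service.
    sent_emails.append(f"Confirmation for order {event.payload['order_id']}")


bus = EventBus()
bus.subscribe("order.placed", send_confirmation)

# Producer side: the e-commerce platform emits events.
bus.publish(Event("order.placed", {"order_id": "A-100"}))
bus.publish(Event("cart.abandoned", {"cart_id": "C-7"}))  # no subscriber, ignored
```

Note that the producer never references `send_confirmation`; adding a second consumer for the same event requires no change on the producing side.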

Event processing patterns typically fall into several categories:

- Simple event processing: Direct reactions to individual events

- Event stream processing: Analysis of continuous flows of events

- Complex event processing (CEP): Recognition of patterns across multiple events

- Event choreography: Distributed coordination through events

State management becomes particularly important in event-driven systems. One common approach, known as event sourcing, derives the current state from the history of events rather than storing it directly. This provides powerful capabilities for auditing, debugging, and system reconstruction.

The Role of AI in Event-Driven Workflows

Artificial intelligence transforms event-driven systems from simple reactive mechanisms to sophisticated platforms capable of intelligent decision-making and proactive operation.

Intelligent Event Processing

AI significantly enhances how systems process and respond to events:

Event classification and prioritization: Machine learning algorithms can automatically categorize incoming events by their importance, urgency, and relevance. This ensures critical events receive immediate attention while less important ones are handled appropriately.
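
As a rough sketch of prioritization, the example below scores each event and serves the highest-scoring one first. The `SEVERITY` table and `score` function are hypothetical placeholders; in a real system a trained classifier would produce the score.

```python
import heapq
import itertools
from typing import Any, Dict, List, Tuple

# Hypothetical weights standing in for a model's output.
SEVERITY = {"payment.failed": 0.9, "server.overload": 0.8, "page.viewed": 0.1}


def score(event: Dict[str, Any]) -> float:
    """Return a priority in [0, 1]; unknown event types get a middle value."""
    return SEVERITY.get(event["type"], 0.5)


class PriorityEventQueue:
    def __init__(self) -> None:
        self._heap: List[Tuple[float, int, Dict[str, Any]]] = []
        self._counter = itertools.count()

    def push(self, event: Dict[str, Any]) -> None:
        # heapq is a min-heap, so the score is negated to pop the highest first.
        # The counter breaks ties in arrival order and avoids comparing dicts.
        heapq.heappush(self._heap, (-score(event), next(self._counter), event))

    def pop(self) -> Dict[str, Any]:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


queue = PriorityEventQueue()
for event_type in ["page.viewed", "payment.failed", "server.overload"]:
    queue.push({"type": event_type})

handled_order = [queue.pop()["type"] for _ in range(len(queue))]
```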

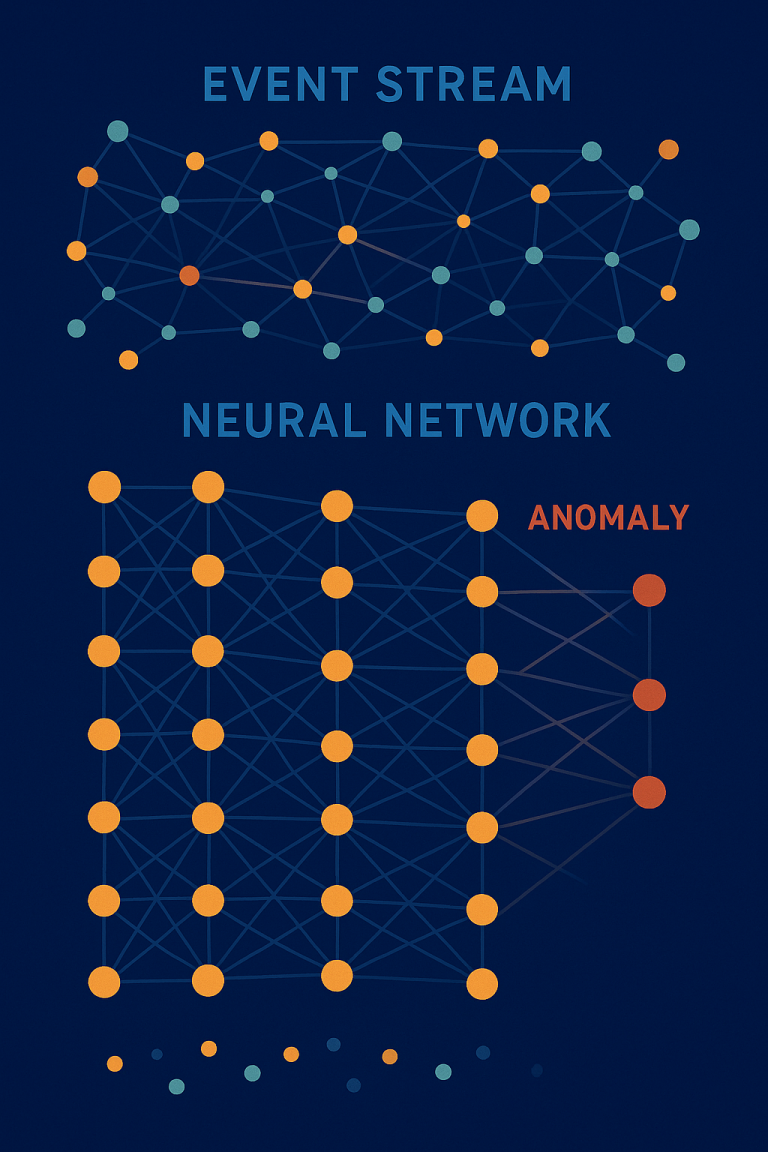

Complex event processing (CEP): AI enables the identification of meaningful patterns across seemingly unrelated events. For example, a combination of unusual login attempts, changed account details, and atypical transaction patterns might indicate fraud—something that would be difficult to detect by examining each event in isolation.

Machine learning for pattern recognition: As systems process more events over time, they can learn normal patterns and improve their ability to detect anomalies. This adaptive learning capability makes event-driven workflows increasingly effective.

Anomaly detection in event streams: AI can monitor continuous streams of events to identify outliers that deviate from expected patterns. This capability is especially valuable in security, operations monitoring, and quality control scenarios.
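
One simple way to illustrate stream anomaly detection is a rolling z-score: compare each new reading with the mean and standard deviation of a recent window. This is a statistical baseline rather than a learned model, and the class name, window size, and threshold below are assumptions chosen for the example.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque, List


class RollingAnomalyDetector:
    def __init__(self, window: int = 20, threshold: float = 3.0,
                 min_samples: int = 5) -> None:
        self._values: Deque[float] = deque(maxlen=window)
        self._threshold = threshold
        self._min_samples = min_samples

    def observe(self, value: float) -> bool:
        """Return True if value deviates strongly from the recent window."""
        is_anomaly = False
        if len(self._values) >= self._min_samples:
            mu = mean(self._values)
            sigma = pstdev(self._values)
            # A zero sigma means the window is constant; skip the check then.
            if sigma > 0 and abs(value - mu) / sigma > self._threshold:
                is_anomaly = True
        # The value joins the window either way, so the baseline keeps adapting.
        self._values.append(value)
        return is_anomaly


detector = RollingAnomalyDetector()
readings = [10, 11, 9, 10, 12, 10, 11, 50, 10]
anomalies: List[float] = [r for r in readings if detector.observe(r)]
```

Because outliers are added to the window, a single spike temporarily widens the baseline. Whether that is desirable depends on the use case.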

Predictive Event Generation

Perhaps the most transformative aspect of AI in event-driven workflows is the ability to move from purely reactive to proactive automation:

Anticipatory workflows: AI models can predict when events are likely to occur and trigger workflows in advance. For instance, a system might detect patterns indicating a customer is about to churn and proactively initiate retention measures.

AI-driven event forecasting: By analyzing historical event data and contextual information, AI can forecast likely future events, with accuracy that depends on the quality and volume of the underlying data. This enables businesses to prepare resources, optimize operations, and make strategic decisions before events actually occur.

The evolution from reactive to proactive automation represents a significant competitive advantage:

| Reactive Automation | Proactive Automation |
|---|---|
| Responds after events occur | Acts before events happen |
| Manages consequences | Prevents issues or capitalizes on opportunities |
| Operates in real-time | Operates ahead of time |
| Driven by actual events | Driven by predicted or synthetic events |

Synthetic events represent an innovative approach where AI creates events that don’t correspond to actual occurrences but serve to trigger beneficial workflows. For example, an AI might generate a “potential stock shortage” event based on trend analysis, even though inventory hasn’t yet reached critical levels.
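
The stock-shortage example can be sketched with a simple linear trend. This is a deliberately naive stand-in for a forecasting model: the function name, the `inventory.potential_shortage` event type, and the parameters are all hypothetical.

```python
from typing import Any, Dict, List, Optional


def forecast_shortage(stock_levels: List[int], minimum: int,
                      horizon_days: int) -> Optional[Dict[str, Any]]:
    """Emit a synthetic event if the trend reaches `minimum` within the horizon.

    `stock_levels` holds one reading per day, oldest first.
    """
    if len(stock_levels) < 2:
        return None
    current = stock_levels[-1]
    if current <= minimum:
        return None  # already a real shortage; not a synthetic event
    # Average daily decrease across the observed period.
    daily_drop = (stock_levels[0] - current) / (len(stock_levels) - 1)
    if daily_drop <= 0:
        return None  # stock is flat or growing
    days_left = (current - minimum) / daily_drop
    if days_left > horizon_days:
        return None
    return {
        "type": "inventory.potential_shortage",
        "synthetic": True,
        "days_until_minimum": round(days_left, 1),
    }


event = forecast_shortage([100, 90, 80, 70], minimum=40, horizon_days=5)
no_event = forecast_shortage([100, 99, 98], minimum=40, horizon_days=5)
```

Marking the event with `"synthetic": True` lets downstream consumers distinguish predictions from actual occurrences.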

Building Event-Driven Workflow Architectures

Implementing effective event-driven systems requires careful architectural planning and selection of appropriate technologies.

Event-Driven Architecture Patterns

Several architectural patterns have emerged as best practices for building event-driven systems:

Event sourcing maintains a log of all events that have occurred in the system as the definitive record of truth. The current state is derived by processing this event log rather than storing state directly. This approach enables powerful capabilities for auditing, debugging, and system reconstruction.
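
A minimal sketch of event sourcing, assuming a hypothetical inventory domain: the only thing stored is the list of events, and the current state (or any past state) is computed by replaying them.

```python
from dataclasses import dataclass
from functools import reduce
from typing import Dict, Iterable


@dataclass(frozen=True)
class StockEvent:
    kind: str  # "received" or "shipped" (hypothetical event kinds)
    sku: str
    quantity: int


def apply_event(state: Dict[str, int], event: StockEvent) -> Dict[str, int]:
    """Return a new state with one event applied; the input is not mutated."""
    if event.kind == "received":
        delta = event.quantity
    elif event.kind == "shipped":
        delta = -event.quantity
    else:
        raise ValueError(f"unknown event kind: {event.kind}")
    new_state = dict(state)
    new_state[event.sku] = new_state.get(event.sku, 0) + delta
    return new_state


def replay(events: Iterable[StockEvent]) -> Dict[str, int]:
    """Derive state by folding the event log from an empty starting state."""
    return reduce(apply_event, events, {})


log = [
    StockEvent("received", "widget", 10),
    StockEvent("shipped", "widget", 3),
    StockEvent("received", "gadget", 5),
]

current_state = replay(log)      # state after all events
earlier_state = replay(log[:1])  # state as of the first event
```

Replaying a prefix of the log is what makes auditing and reconstruction possible: any historical state can be recomputed on demand.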

CQRS (Command Query Responsibility Segregation) separates operations that modify data (commands) from operations that read data (queries). This pattern works particularly well with event sourcing and allows each aspect of the system to be optimized independently.
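
The separation can be sketched in a few lines. In this hypothetical example the command side validates requests and records events, while the query side maintains its own read-optimized view from those events. All class and field names are illustrative assumptions.

```python
from typing import Any, Callable, Dict, List

Event = Dict[str, Any]


class OrderSummaryReadModel:
    """Query side: a denormalized view updated by consuming events."""

    def __init__(self) -> None:
        self._orders = 0
        self._revenue = 0.0

    def project(self, event: Event) -> None:
        if event["type"] == "order.placed":
            self._orders += 1
            self._revenue += float(event["total"])

    def summary(self) -> Dict[str, Any]:
        return {"orders": self._orders, "revenue": self._revenue}


class OrderCommandHandler:
    """Command side: validates input, appends events, never serves reads."""

    def __init__(self, log: List[Event],
                 projectors: List[Callable[[Event], None]]) -> None:
        self._log = log
        self._projectors = projectors

    def place_order(self, order_id: str, total: float) -> None:
        if total <= 0:
            raise ValueError("order total must be positive")
        event = {"type": "order.placed", "order_id": order_id, "total": total}
        self._log.append(event)
        # Projected synchronously here; real systems often do this asynchronously.
        for project in self._projectors:
            project(event)


event_log: List[Event] = []
read_model = OrderSummaryReadModel()
commands = OrderCommandHandler(event_log, [read_model.project])

commands.place_order("A-100", 20.0)
commands.place_order("A-101", 5.5)
result = read_model.summary()
```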

Pub/sub systems implement the publisher-subscriber pattern where event publishers have no knowledge of subscribers. Events are published to channels, and subscribers receive only the events they’re interested in. This creates loose coupling between components, enhancing system flexibility.

Microservices and event-driven communication: Event-driven approaches pair naturally with microservices architectures, where services communicate primarily through events rather than direct API calls. This enhances decoupling and enables greater scalability and resilience.

Technology Stack for Event-Driven Workflows

Building effective event-driven AI workflows requires selecting the right technologies for your specific needs:

- Event streaming platforms: Technologies like Apache Kafka, Amazon Kinesis, or Google Cloud Pub/Sub provide the backbone for high-throughput, distributed event processing

- Message brokers: Solutions like RabbitMQ, ActiveMQ, or Azure Service Bus handle reliable message delivery between components

- Serverless functions: AWS Lambda, Azure Functions, or Google Cloud Functions offer ideal environments for event handlers that need to scale dynamically

- Event processing frameworks: Apache Flink and Spark Streaming (also available through managed platforms such as Databricks) provide sophisticated capabilities for stream processing and complex event processing

- AI/ML services: Cloud-based services like Google Vertex AI, Amazon SageMaker, or Azure Machine Learning integrate machine learning capabilities into event workflows

The ideal architecture typically combines several of these technologies, with each handling specific aspects of the overall event processing pipeline.

Real-World Applications of Event-Driven Workflows

The power of event-driven AI workflows becomes apparent when examining their practical applications across industries.

Financial Services and Fraud Detection

Financial institutions face constant threats from fraudulent activities that continuously evolve in sophistication. Event-driven AI workflows provide powerful defenses:

Real-time transaction monitoring: Every transaction generates events that are instantly analyzed for suspicious patterns. AI models can evaluate dozens of risk factors in milliseconds, flagging potential fraud for further investigation or automatic blocking.

Multi-event fraud patterns: Advanced detection looks beyond individual transactions to identify patterns across multiple events. For example, a series of small transactions followed by a large one might indicate a criminal “testing” a stolen card before making a major purchase.
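
The card-testing pattern described above can be expressed as a small stateful rule. The sketch below is a hand-written heuristic rather than an AI model, and the thresholds, class name, and parameters are assumptions picked for illustration. It also assumes events for each card arrive in timestamp order.

```python
from collections import defaultdict, deque
from typing import DefaultDict, Deque


class CardTestingDetector:
    def __init__(self, small_limit: float = 5.0, large_limit: float = 500.0,
                 min_small: int = 3, window_seconds: float = 600.0) -> None:
        self._small_limit = small_limit
        self._large_limit = large_limit
        self._min_small = min_small
        self._window = window_seconds
        # Per card: timestamps of recent small transactions.
        self._recent_small: DefaultDict[str, Deque[float]] = defaultdict(deque)

    def observe(self, card_id: str, amount: float, timestamp: float) -> bool:
        """Return True when a large charge follows several recent small ones."""
        recent = self._recent_small[card_id]
        # Forget small transactions that fell out of the time window.
        while recent and timestamp - recent[0] > self._window:
            recent.popleft()
        if amount <= self._small_limit:
            recent.append(timestamp)
            return False
        if amount >= self._large_limit and len(recent) >= self._min_small:
            recent.clear()  # avoid flagging the same burst twice
            return True
        return False


detector = CardTestingDetector()
transactions = [("c1", 1.0, 0), ("c1", 1.5, 60), ("c1", 2.0, 120), ("c1", 900.0, 180)]
flags = [detector.observe(card, amount, ts) for card, amount, ts in transactions]
```

No single transaction here is suspicious on its own; the flag only appears once the events are considered together.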

Regulatory compliance automation: Financial institutions must comply with complex regulatory requirements. Event-driven workflows can automatically trigger compliance checks, documentation, and reporting based on transaction events, reducing both risk and operational overhead.

IoT and Smart Manufacturing

The Industrial Internet of Things (IIoT) generates massive volumes of event data that can drive intelligent automation:

Sensor data processing workflows: Manufacturing equipment equipped with sensors continuously generates event streams. AI-powered workflows analyze these streams to monitor performance, detect anomalies, and trigger appropriate responses.

Predictive maintenance: By recognizing patterns that precede equipment failures, AI can generate synthetic “maintenance needed” events before actual breakdowns occur, dramatically reducing downtime and repair costs.

Supply chain event management: Modern supply chains generate events at every stage—from production to delivery. Event-driven workflows enable real-time tracking, intelligent routing, and proactive handling of disruptions across complex global networks.

Quality control automation: Vision systems and sensors can generate events when detecting potential quality issues. AI-powered workflows can automatically adjust production parameters or flag items for human inspection.

Customer Experience Optimization

Perhaps the most visible application for many businesses is in creating responsive, personalized customer experiences:

Real-time personalization: Customer interactions generate events that trigger immediate personalization. When a customer views a product, abandons a cart, or completes a purchase, event-driven workflows can instantly update recommendations, content, and offers across all channels.

Context-aware engagement: AI can correlate events across channels to understand customer context. A customer who researches a product on mobile, then later visits the website from desktop, can receive a continuous experience that acknowledges their journey.

Customer journey orchestration: Complex customer journeys can be orchestrated through event-driven workflows that respond to customer actions in real time, delivering the right message through the right channel at the right moment.

Implementation Strategies and Best Practices

Successfully implementing event-driven AI workflows requires both technical expertise and organizational change management.

Event-Driven Thinking: A Cultural Shift

The transition to event-driven thinking represents a significant cultural shift for many organizations:

From process-centric to event-centric thinking: Teams need to reframe their understanding of systems around events rather than processes. This means identifying key business events and designing systems that respond appropriately to them.

Building event-driven teams: Organizations may need to restructure teams around event domains rather than functional areas. Cross-functional teams that understand both the business significance and technical handling of specific event types often prove most effective.

Governance models: As events become the primary medium of integration between systems, governance becomes crucial. Organizations need clear policies for event ownership, schema management, access control, and data privacy.

Technical Implementation Roadmap

A phased approach to implementation helps manage complexity and demonstrate value early:

- Event storming: Begin with collaborative workshops to identify key business events, their triggers, and required responses

- Event schema design: Define standardized formats for your events to ensure consistency and interoperability

- Pilot implementation: Start with a bounded context where event-driven approaches can deliver significant value

- Testing framework: Develop comprehensive testing strategies for event-driven systems, including event simulation and replay capabilities

- Monitoring and observability: Implement tools to track event flows, processing latency, and system health

- Scaling strategy: Plan for horizontal scaling of event processing components to handle growing event volumes
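
For the schema design step, one possible starting point is a common envelope that every event shares. The field names below (`schema_version`, `event_id`, `occurred_at`) are assumptions for this sketch, not a standard; the JSON round trip also shows how recorded events could be stored and replayed in tests.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Any, Dict


@dataclass(frozen=True)
class EventEnvelope:
    type: str
    payload: Dict[str, Any]
    schema_version: int = 1
    event_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    occurred_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def __post_init__(self) -> None:
        # Reject malformed events at the producer instead of in every consumer.
        if not self.type:
            raise ValueError("event type must not be empty")
        if self.schema_version < 1:
            raise ValueError("schema_version must be >= 1")

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, raw: str) -> "EventEnvelope":
        return cls(**json.loads(raw))


original = EventEnvelope("order.placed", {"order_id": "A-100", "total": 20.0})
restored = EventEnvelope.from_json(original.to_json())
```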

Challenges and Future Trends

While event-driven AI workflows offer tremendous potential, they also present unique challenges that organizations must address.

Common Implementation Challenges

Be prepared to address these common hurdles:

- Event consistency and ordering: In distributed systems, ensuring consistent event ordering can be technically challenging but critical for many business processes

- Debugging complex event flows: When issues occur, tracing the chain of events that led to the problem requires sophisticated observability tools

- Managing event schema evolution: As business requirements change, event schemas must evolve while maintaining compatibility with existing consumers

- Performance optimization: High-volume event streams require careful performance tuning to prevent bottlenecks and ensure timely processing
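
The ordering challenge is often handled on the consumer side. The sketch below assumes each producer attaches a per-key sequence number starting at 1 (an assumption, not something every broker provides): the consumer buffers events that arrive early and drops duplicates, so the handler sees each event once and in order.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List, Tuple


class OrderedConsumer:
    def __init__(self, handler: Callable[[str, Any], None]) -> None:
        self._handler = handler
        # Next sequence number expected for each key (for example an order ID).
        self._next_seq: DefaultDict[str, int] = defaultdict(lambda: 1)
        # Events that arrived ahead of their turn, per key.
        self._pending: DefaultDict[str, Dict[int, Any]] = defaultdict(dict)

    def receive(self, key: str, seq: int, payload: Any) -> None:
        if seq < self._next_seq[key] or seq in self._pending[key]:
            return  # duplicate delivery: already handled or already buffered
        self._pending[key][seq] = payload
        # Release every buffered event that is now next in line.
        while self._next_seq[key] in self._pending[key]:
            current = self._next_seq[key]
            self._handler(key, self._pending[key].pop(current))
            self._next_seq[key] = current + 1


processed: List[Tuple[str, Any]] = []
consumer = OrderedConsumer(lambda key, payload: processed.append((key, payload)))

# Out-of-order and duplicated deliveries for the same key.
consumer.receive("order-1", 2, "paid")
consumer.receive("order-1", 1, "placed")
consumer.receive("order-1", 1, "placed")
consumer.receive("order-1", 3, "shipped")
```

This buffer is unbounded, so a production version would need a limit or timeout for gaps that never fill.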

The Future of Event-Driven Workflows

Looking ahead, several trends are shaping the evolution of event-driven AI workflows:

Edge computing and local event processing: As computing power moves closer to event sources (IoT devices, retail locations, vehicles), more event processing will occur at the edge, reducing latency and bandwidth requirements while enabling faster responses.

AI-generated workflow optimization: Meta-learning AI systems will increasingly analyze event patterns and workflow performance to suggest or automatically implement optimizations to the workflows themselves.

Event-driven business processes: The event-driven paradigm is expanding beyond technical architecture to influence how businesses design their core processes, leading to more responsive, adaptive organizations.

Autonomous systems and self-healing workflows: The ultimate evolution will be fully autonomous systems that not only react to events but can reconfigure their own event handling logic based on changing conditions and objectives.

Conclusion

Event-driven AI workflows represent a fundamental shift in how we design automation systems—moving from rigid, sequential processes to flexible, intelligent reactions to real-time events. By combining event-driven architectures with artificial intelligence, organizations can create systems that not only respond instantly to changes but can anticipate needs and proactively address opportunities and challenges.

The journey to fully realized event-driven AI workflows may be challenging, but the competitive advantages—greater agility, enhanced customer experiences, operational efficiencies, and new business capabilities—make it well worth the investment. By starting with clear business objectives, building the right technical foundations, and embracing the cultural shift to event-centric thinking, your organization can harness the transformative power of reactive automation.

Are you ready to transform your business processes with event-driven AI workflows? The future of intelligent, responsive automation awaits.